GPUs have been used for CFD simulation for a long time, but more CFD solvers have recently been updated to run their entire code on the GPU, taking full advantage of recent NVIDIA cards such as the A100 and the older V100. Multi-GPU solvers include Ansys Fluent, Siemens STAR-CCM+, CONVERGE, ultraFluidX, Particleworks, and many others.

There are many caveats on the types of models that can run completely on a GPU, but if your model meets all the requirements, the speedups can be impressive. TotalCAE makes it easy to use GPUs with any of these CFD applications.

Multi-GPU Support in Ansys Fluent

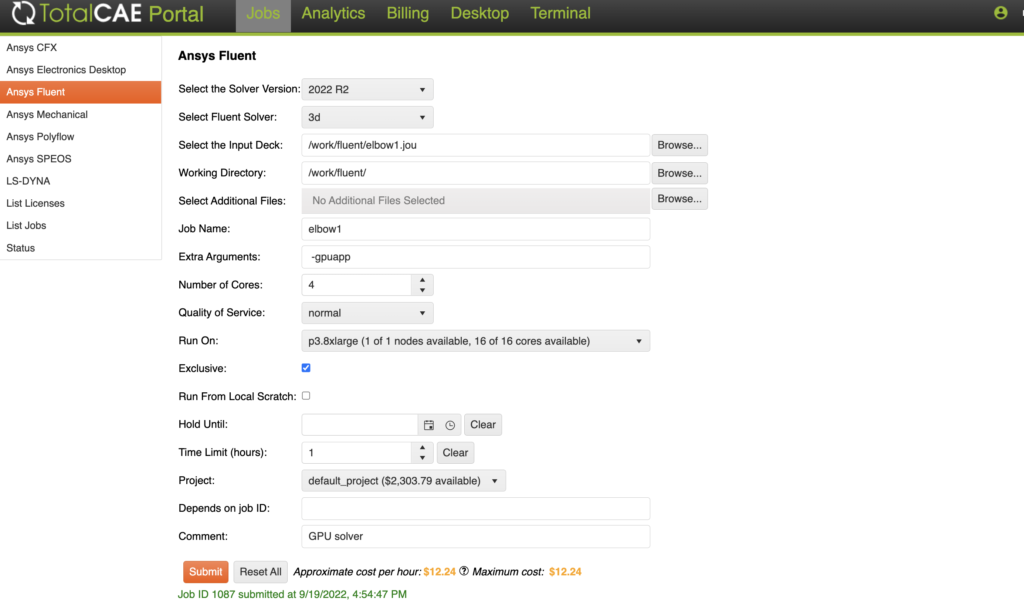

For Ansys Fluent, just add the GPU option and pick a V100- or A100-enabled on-prem or cloud node. Since these codes run on the GPU, you select the number of GPUs instead of CPUs. TotalCAE calculates the Ansys licensing required for the GPUs and makes sure your job does not run until sufficient licenses are available.

Multi-GPU Support in Particleworks

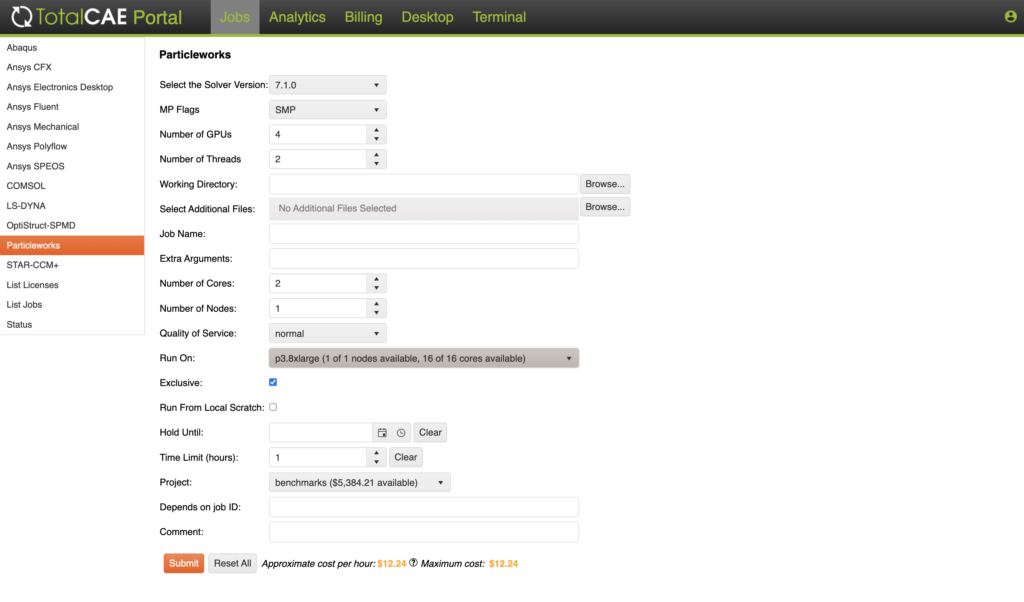

Particleworks users have fine-grained control over the number of GPUs and other hardware resources. TotalCAE calculates the number of licenses required based on your options, so your job will queue and wait for the required licensing.

Licensing for multi-GPU

Most CFD vendors have made GPU adoption simple by keeping the licensing cost relatively low. For example, Ansys Fluent treats a GPU as 1 core for licensing, and you are very likely to get much more than 1 CPU core's worth of compute from a GPU (provided your model is compatible and works).

TotalCAE can automatically calculate the licensing for your solver and model even when using GPUs, so that the jobs will queue if you don’t have sufficient CFD licensing to run.
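As a rough illustration of the licensing arithmetic described above, the sketch below counts license units for a job and decides whether it would have to queue. It assumes the simplified rule from the example (each GPU counts as 1 core); the function names and the `units_per_gpu` parameter are hypothetical and do not reflect TotalCAE's actual implementation or any vendor's full license terms.

```python
def license_units(cpu_cores: int, gpus: int, units_per_gpu: int = 1) -> int:
    """Return the license units a job would consume.

    Assumption: every CPU core costs 1 unit and every GPU costs
    `units_per_gpu` units (1 by default, per the example in the text).
    """
    if cpu_cores < 0 or gpus < 0 or units_per_gpu < 0:
        raise ValueError("core, GPU, and unit counts must be non-negative")
    return cpu_cores + gpus * units_per_gpu


def should_queue(required_units: int, available_units: int) -> bool:
    """Return True when the job must wait for more licenses to free up."""
    return required_units > available_units


if __name__ == "__main__":
    available = 36  # hypothetical number of license units currently free

    gpu_job = license_units(cpu_cores=0, gpus=4)    # GPU-only job on 4 GPUs
    cpu_job = license_units(cpu_cores=96, gpus=0)   # CPU-only job on 96 cores

    print("GPU job units:", gpu_job, "queue:", should_queue(gpu_job, available))
    print("CPU job units:", cpu_job, "queue:", should_queue(cpu_job, available))
```

Under this assumed rule, a GPU-only job needs far fewer license units than a CPU job on a large core count, which is why it is less likely to wait in the queue for licensing.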

Do multi-GPU-only solvers save money on hardware or cloud costs?

It is not a slam dunk that multi-GPU-only solvers will save you on hardware costs, primarily because an NVIDIA A100 costs $24,000, and the GPU may not completely eliminate the need for CPU horsepower across all your models and applications.

As for cost savings potential, a multi-GPU NVIDIA A100 server costs around $100K to purchase or lease. You could get 392 compute cores on-prem for a similar price. If you need to run other models that don’t work on a GPU-only node, or other applications on the cluster, then the larger number of compute nodes may be a better fit in terms of cost and flexibility for running a variety of models and codes. Some clients are adding a multi-GPU node for CFD to free up the cluster for other applications or models that are not GPU compatible, and also using it for AI/ML workloads, which run on the same type of hardware.

On the cloud, a multi-GPU node such as the AWS p4d.24xlarge costs around $33 per hour to rent, compared to $2.88 per hour for a 96-core server. However, the cloud has a nice advantage: if your model doesn’t work on the GPU nodes, or your particular job costs more to solve there, you can just choose a CPU-only option. TotalCAE on AWS and TotalCAE on Azure let you pick from a variety of cloud hardware and show you both the estimated and final cost of each job, making it easy to determine which option is best for your model.
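To make the cloud comparison concrete, here is a small sketch of the break-even arithmetic. It uses the two prices quoted above and assumes both are hourly rates; the function names and the sample runtimes are hypothetical, and real job costs depend on your model, region, and current pricing.

```python
GPU_NODE_RATE = 33.00  # USD per hour, approximate p4d.24xlarge figure from the text
CPU_NODE_RATE = 2.88   # USD per hour, assumed rate for the 96-core server


def job_cost(hourly_rate: float, runtime_hours: float) -> float:
    """Cost of one job billed at a flat hourly rate."""
    return hourly_rate * runtime_hours


def break_even_speedup(gpu_rate: float, cpu_rate: float) -> float:
    """Speedup the GPU node needs over the CPU node to cost the same per job."""
    return gpu_rate / cpu_rate


def cheaper_option(cpu_runtime_hours: float, gpu_speedup: float) -> str:
    """Return 'gpu' or 'cpu' depending on which node solves the job for less."""
    cpu_cost = job_cost(CPU_NODE_RATE, cpu_runtime_hours)
    gpu_cost = job_cost(GPU_NODE_RATE, cpu_runtime_hours / gpu_speedup)
    return "gpu" if gpu_cost < cpu_cost else "cpu"


if __name__ == "__main__":
    speedup = break_even_speedup(GPU_NODE_RATE, CPU_NODE_RATE)
    print(f"Break-even speedup: {speedup:.1f}x")

    # Hypothetical 10-hour CPU job at two different GPU speedups.
    for s in (8, 15):
        print(f"{s}x speedup -> cheaper on: {cheaper_option(10, s)}")
```

With these rates, the GPU node has to finish the job roughly 11.5 times faster than the 96-core server before it becomes the cheaper choice on a pure per-job basis; shorter turnaround time may still justify the GPU node below that point.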